The publication of the Likely Exploited Vulnerabilities (LEV) metric by researchers from CISA and NIST represents a well-intentioned effort to enhance the prioritization of vulnerability remediation. It aims to augment existing approaches like the Known Exploited Vulnerabilities (KEV) catalog and the Exploit Prediction Scoring System (EPSS). While LEV provides a probabilistic framework that leverages temporal data to predict exploitation, its introduction adds yet another layer to an already fragmented vulnerability intelligence ecosystem without fundamentally addressing the root limitations in exploit predictability.

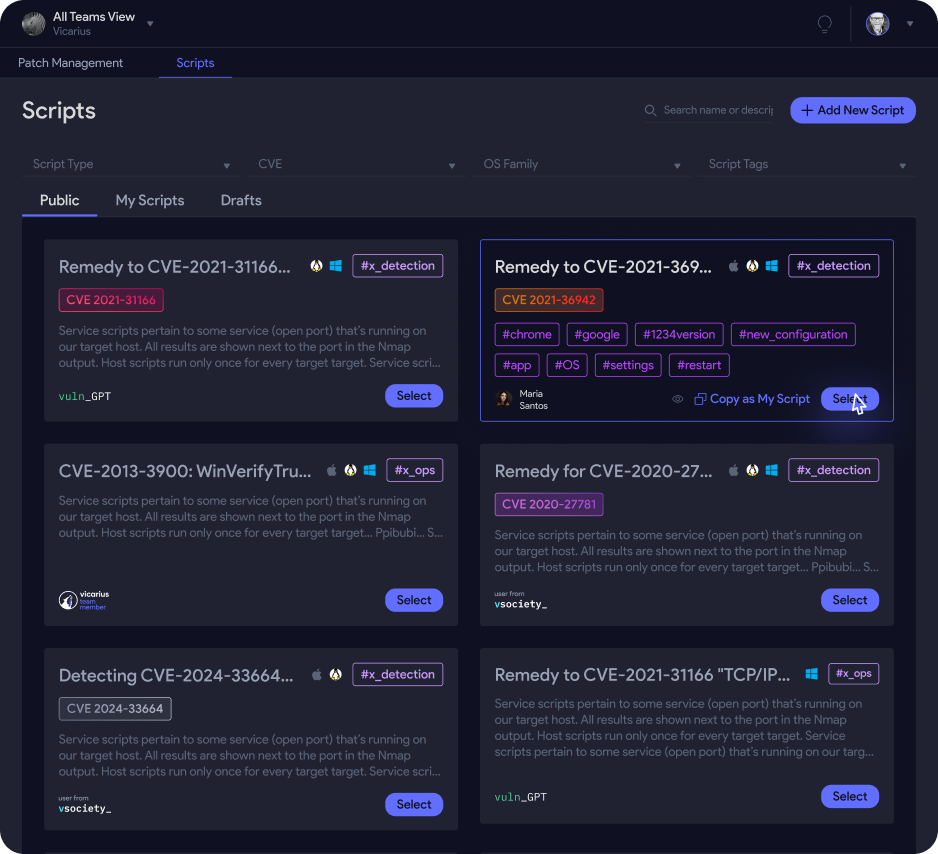

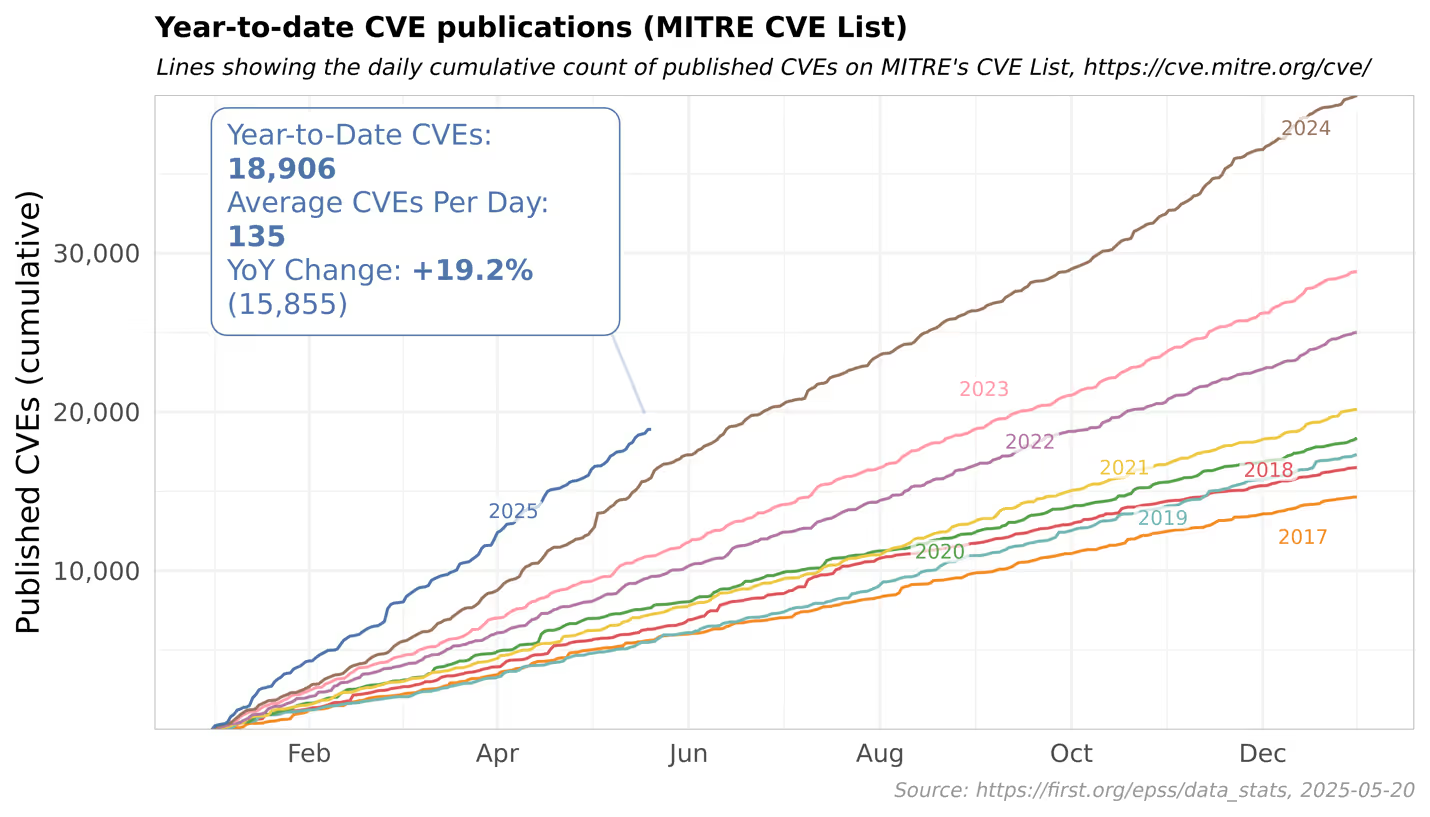

This visual illustrates the exponential rise in disclosed vulnerabilities, projected to surpass 50,000 annually. This trend highlights the scale and velocity problem LEV does not solve: it doesn’t reduce the volume of vulnerabilities or enable real-time prioritization at scale.

The Limitations of LEV

From a professional standpoint, the core problem LEV attempts to solve, identifying which vulnerabilities are likely to be exploited, is valid. However, LEV, like its predecessors, suffers from several inherent limitations:

- Dependence on Lagging Indicators:

LEV relies heavily on historical data points such as EPSS scores and KEV publication dates, which inherently lag behind active exploitation. While longitudinal EPSS scoring provides more context than a single score snapshot, it still cannot provide real-time exploitation signals.

- Over-reliance on Publicly Available Data:

Both LEV and EPSS use data that is publicly accessible or derived from open sources. These do not capture zero-day exploitation, private threat intelligence, or adversary behaviors in closed forums. The absence of real-time telemetry limits the practical predictive capability.

- Another Score, Not a Signal:

LEV is ultimately another numerical probability layered onto existing scores. It does not offer a fundamentally new detection or prediction method. Organizations now must choose between CVSS, EPSS, KEV, and LEV with no guarantee that stacking these together improves decision-making. In fact, the added complexity may increase cognitive and operational load for already resource-constrained security teams.

- Lack of Contextual Weighting:

LEV assumes a generic risk model that does not account for environmental context, asset criticality, exposure, or compensating controls. This makes the metric less useful when integrated into actual remediation workflows that prioritize business impact over theoretical likelihood.

What Needs to Be Done Instead

Rather than adding another score, the industry needs a shift in how exploit prediction is approached. Below are practical, implementable suggestions that move beyond static metrics toward dynamic, operationally integrated insights.

1. Live Exploit Telemetry from Global Honeypots and Deception Networks

The most actionable prediction model is built on real-time exploitation data. Global honeypot grids, especially those embedded across cloud, edge, and IoT environments, can detect exploitation attempts within minutes of vulnerability disclosure. Deception technologies (decoys, traps) deployed across diverse environments can feed telemetry into a centralized intelligence feed that tracks attempted exploits in the wild. This shifts the approach from predicting based on metadata to observing attacker behavior in real time.

Action: National-level CERTs or vendor coalitions should invest in and share access to federated deception networks. These systems should auto-tag vulnerabilities observed in exploitation chains, feeding this data to vendors and risk engines.
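As a rough illustration of the auto-tagging step, the sketch below flags a CVE once exploitation attempts against it have been seen on several distinct sensors within a recent time window. The `HoneypotEvent` record, the `tag_exploited` function, the 24-hour window, the two-sensor threshold, and the placeholder CVE and sensor IDs are all assumptions made for this example, not part of any existing feed or product.

```python
from collections import defaultdict
from dataclasses import dataclass
from datetime import datetime, timedelta


@dataclass(frozen=True)
class HoneypotEvent:
    """Hypothetical record emitted by one deception sensor."""
    sensor_id: str
    cve_id: str
    observed_at: datetime


def tag_exploited(events, now, window=timedelta(hours=24), min_sensors=2):
    """Return {cve_id: distinct sensor count} for CVEs seen on at least
    `min_sensors` different sensors inside the look-back window."""
    sensors_by_cve = defaultdict(set)
    for ev in events:
        if now - window <= ev.observed_at <= now:
            sensors_by_cve[ev.cve_id].add(ev.sensor_id)
    return {
        cve: len(sensors)
        for cve, sensors in sensors_by_cve.items()
        if len(sensors) >= min_sensors
    }


# Placeholder data: the CVE and sensor IDs are invented for illustration.
now = datetime(2025, 1, 1, 12, 0)
events = [
    HoneypotEvent("cloud-eu-1", "CVE-0000-0001", now - timedelta(hours=1)),
    HoneypotEvent("iot-us-3", "CVE-0000-0001", now - timedelta(hours=2)),
    HoneypotEvent("edge-ap-2", "CVE-0000-0002", now - timedelta(hours=3)),
    HoneypotEvent("cloud-eu-1", "CVE-0000-0001", now - timedelta(days=3)),
]
print(tag_exploited(events, now))
```

Requiring several distinct sensors is one simple way to filter out noise from a single misconfigured decoy. A real federated network would need richer evidence, such as payload matching, before tagging a CVE.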

2. Dark Web and Closed Forum Exploit Monitoring

Much of the exploit trade happens in non-indexed, private areas of the internet. Advanced threat intelligence platforms can extract patterns of exploit discussions, PoC sales, or kit advertisements and correlate them with known CVEs. This form of intent-based intelligence, derived from adversary interest, can predict exploitation likelihood well before public tools emerge.

Action: Enrich vulnerability data with adversary intent signals. For example, if a CVE is being actively discussed in three known ransomware affiliate forums, its risk score should spike, regardless of whether it’s in KEV or has an EPSS score.
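The three-forum rule above could be encoded roughly as follows. This is a minimal sketch: the function name, the 0.9 floor that a "spike" raises the score to, and the forum labels are assumptions chosen for illustration, and the base score is taken to be any probability-like value in [0, 1].

```python
def intent_adjusted_score(base_score, forum_mentions,
                          spike_threshold=3, spike_floor=0.9):
    """Raise the score to at least `spike_floor` once the CVE is being
    discussed in `spike_threshold` or more distinct forums.

    `forum_mentions` is an iterable of forum identifiers; repeated
    mentions in the same forum count once.
    """
    if not 0.0 <= base_score <= 1.0:
        raise ValueError("base_score must be in [0, 1]")
    distinct_forums = len(set(forum_mentions))
    if distinct_forums >= spike_threshold:
        return max(base_score, spike_floor)
    return base_score


# Hypothetical forum labels; a low base score spikes at three distinct forums.
print(intent_adjusted_score(0.05, ["forum-a", "forum-b", "forum-c"]))
print(intent_adjusted_score(0.05, ["forum-a", "forum-a", "forum-b"]))
```

Using a floor rather than a multiplier means the spike applies even when the CVE has a very low score, or none that is meaningful, which matches the "regardless of KEV or EPSS" intent.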

3. Integration with Exploit Development Milestones

A vulnerability is more likely to be exploited once key exploit components are available. These include:

- Public proof-of-concept code

- Integration into popular frameworks (e.g., Metasploit, Cobalt Strike)

- Availability of automated scanners with signatures for the vulnerability

By tracking these development milestones, an exploit lifecycle index can be built to assess how close a vulnerability is to mass exploitation.

Action: Maintain an exploit readiness index per CVE, based on observed technical exploit progressions. This should weigh more heavily than generalized CVE metadata.
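One simple way to picture such an index is a weighted checklist over the three milestones listed above. The sketch below assumes integer weights that sum to 100. The weights, the `Milestone` enum, and the `readiness_index` function are illustrative choices, not an established scoring scheme.

```python
from enum import Enum
from typing import Iterable


class Milestone(Enum):
    PUBLIC_POC = "public_poc"                # proof-of-concept code is public
    FRAMEWORK_MODULE = "framework_module"    # e.g. a module in an exploit framework
    SCANNER_SIGNATURE = "scanner_signature"  # automated scanners can detect it


# Assumed weights (points out of 100); tune these against observed outcomes.
WEIGHTS = {
    Milestone.PUBLIC_POC: 30,
    Milestone.FRAMEWORK_MODULE: 40,
    Milestone.SCANNER_SIGNATURE: 30,
}


def readiness_index(observed: Iterable[Milestone]) -> int:
    """Sum the weights of the distinct milestones observed for one CVE."""
    return sum(WEIGHTS[m] for m in set(observed))


print(readiness_index([Milestone.PUBLIC_POC]))
print(readiness_index(list(Milestone)))
```

Integer points keep the arithmetic exact and make the index easy to explain to a remediation team, at the cost of ignoring how recently each milestone was reached.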

4. Asset-Aware Risk Modeling with Local Exploitability Insights

Global probability scores fail to capture the risk at the local environment level. For example, a critical RCE vulnerability may be unlikely to be exploited globally but pose a severe risk if it affects a publicly exposed asset with no mitigations. Similarly, lateral movement exploits may be irrelevant in a segmented network.

Action: Exploit likelihood scores should be dynamically adjusted based on:

- Network exposure (e.g., is the service internet-facing?)

- Authentication requirements

- Compensating controls in place (e.g., WAF, EDR)

- Asset classification (e.g., domain controller, crown jewel system)

This contextualization makes prioritization actionable and tailored.
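The adjustment factors listed above can be sketched as multipliers applied to a global likelihood score. Everything numeric here is an assumption for illustration: the multipliers (2.0, 0.5, 0.7, 0.8, 1.5), the `AssetContext` fields, and the choice to clamp the result to 1.0 would all need calibration against a real environment.

```python
from dataclasses import dataclass


@dataclass
class AssetContext:
    """Hypothetical description of where a vulnerable service runs."""
    internet_facing: bool
    requires_auth: bool
    compensating_controls: int  # count of controls such as WAF or EDR
    crown_jewel: bool           # e.g. domain controller


def local_likelihood(global_likelihood: float, ctx: AssetContext) -> float:
    """Scale a global exploit likelihood by local context, clamped to 1.0."""
    if not 0.0 <= global_likelihood <= 1.0:
        raise ValueError("global_likelihood must be in [0, 1]")
    factor = 2.0 if ctx.internet_facing else 0.5
    if ctx.requires_auth:
        factor *= 0.7
    factor *= 0.8 ** ctx.compensating_controls  # each control reduces the score
    if ctx.crown_jewel:
        factor *= 1.5
    return min(1.0, global_likelihood * factor)


exposed = AssetContext(internet_facing=True, requires_auth=False,
                       compensating_controls=0, crown_jewel=True)
segmented = AssetContext(internet_facing=False, requires_auth=True,
                         compensating_controls=2, crown_jewel=False)
print(local_likelihood(0.1, exposed), local_likelihood(0.1, segmented))
```

With the same global score, the exposed crown-jewel asset ends up ranked well above the segmented host, which is the behavior the list above calls for.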

5. Machine Learning Models Based on Post-Exploitation Telemetry

Instead of relying purely on pre-exploitation indicators, telemetry from actual breaches (endpoint forensics, SIEM logs, threat hunting reports) can feed supervised learning models. These models can be trained to identify vulnerability characteristics that consistently appear in real-world attacks.

Action: Aggregate anonymized data from incident response engagements to train real-world exploitation likelihood models. These should evolve as attacker tradecraft changes.
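To make the supervised-learning idea concrete, here is a minimal sketch of a logistic regression trained with plain gradient descent, using only the standard library. The three binary features (public PoC available, remotely reachable, authentication required) and the eight training rows are invented for illustration. A real model would be trained on actual incident response data with a proper ML library and proper validation.

```python
import math


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def predict(weights, bias, x):
    """Estimated probability that a vulnerability with features x is exploited."""
    return sigmoid(bias + sum(w * xi for w, xi in zip(weights, x)))


def train(rows, labels, lr=0.5, epochs=500):
    """Fit logistic regression by batch gradient descent on log loss."""
    weights = [0.0] * len(rows[0])
    bias = 0.0
    n = len(rows)
    for _ in range(epochs):
        grad_w = [0.0] * len(weights)
        grad_b = 0.0
        for x, y in zip(rows, labels):
            err = predict(weights, bias, x) - y
            for j, xi in enumerate(x):
                grad_w[j] += err * xi
            grad_b += err
        weights = [w - lr * g / n for w, g in zip(weights, grad_w)]
        bias -= lr * grad_b / n
    return weights, bias


# Made-up rows: [public_poc, remote, requires_auth]; label 1 = seen in a breach.
rows = [[1, 1, 0], [1, 1, 0], [1, 0, 0], [0, 1, 1],
        [0, 0, 1], [0, 0, 0], [1, 1, 1], [0, 1, 0]]
labels = [1, 1, 1, 0, 0, 0, 1, 0]

weights, bias = train(rows, labels)
print(predict(weights, bias, [1, 1, 0]), predict(weights, bias, [0, 0, 1]))
```

Retraining on a rolling window of recent incidents is one way such a model could keep pace as attacker tradecraft changes.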

Final words

While LEV contributes incremental value, it is insufficient as a standalone breakthrough. The future of exploit prediction lies in real-time behavioral signals, adversary intent intelligence, contextual asset risk modeling, and exploit development tracking, not in more static probabilities based on outdated metadata. To move the industry forward, security teams and vendors must focus on dynamic, signal-driven exploit forecasting integrated directly into operational workflows. Only then can we meaningfully close the gap between vulnerability disclosure and secure remediation.
